Dynamic Jenkins Slave Provisioning on OpenShift

Posted: January 31st, 2016 | Author: sabre1041 | Filed under: Technology

In a previous post, Clustering Jenkins on OpenShift, we introduced how to run the Jenkins Continuous Integration server connected to a collection of slave instances that run Jenkins jobs, all within OpenShift. The slave instances were configured as a pool of running Docker containers that the Jenkins master discovers automatically through the Jenkins Swarm plugin. This pool can be scaled up or down as necessary, so the workload it can handle is capped only by the resource limits of the underlying cloud infrastructure. While this approach gives administrators the flexibility to define the state of their infrastructure, it can waste resources, since idle slaves sit waiting for incoming job requests. An alternative approach is for the Jenkins master to dynamically provision slave instances as necessary within the OpenShift environment. This process is facilitated by the Jenkins Kubernetes plugin. In a few short steps, Jenkins can be configured to run jobs on dynamically provisioned slaves, or to use them to complement a set of existing statically defined slaves managed with the Swarm plugin as described earlier.

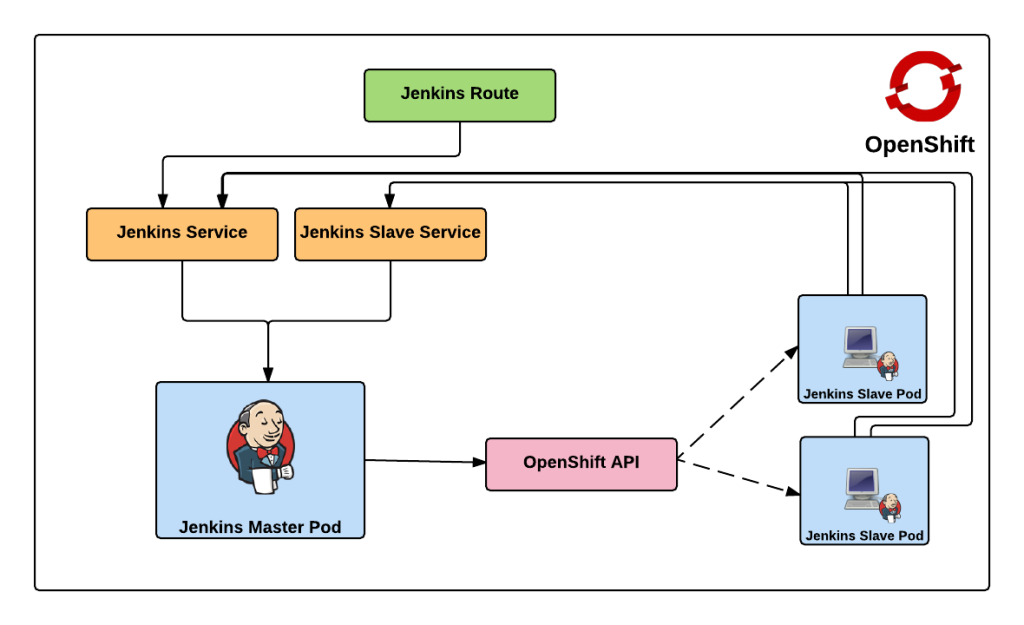

Prior to creating any resources within OpenShift, let’s discuss the components involved in being able to dynamically provision Jenkins slave instances.

Note: I will forgo a discussion of Jenkins masters and slaves. I suggest reading the previous entry, which provides an overview of these concepts.

When using the Jenkins Swarm plugin as in the previous post, slave instances were provisioned by OpenShift in a predetermined manner, similar to any other application deployment. The number of slave instances was governed by project members or cluster administrators. These instances were configured with the parameters necessary to communicate with the Jenkins master, which in turn allowed them to register themselves to take on workload. This registration and discovery of slaves provides a substantial benefit to Jenkins administrators, as they no longer need to manually configure the slave instances within Jenkins. However, when working with the Kubernetes plugin, the responsibility for provisioning new slave instances belongs to the Jenkins master and is based on the current workload of the master. When the Jenkins master determines that it needs additional resources to run jobs, it launches a slave from a preconfigured Docker image: the plugin communicates with the Kubernetes (OpenShift) API to create a new container/pod. Using the parameters the plugin automatically specifies when starting the container, the slave has all of the information it needs to communicate back with the master, complete the registration, and perform the job. Once the job completes, the slave is destroyed.
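
To make the provisioning step more concrete, here is a minimal Python sketch of the kind of pod definition that could be submitted to the Kubernetes (OpenShift) API for a single job. It is only an illustration, not the plugin's actual implementation: the function name `build_slave_pod`, the image name, the label, and the environment variable names (`JENKINS_URL`, `JENKINS_SECRET`, `JENKINS_NAME`) are all assumptions chosen for this example. The plugin decides for itself how the connection parameters are passed to the container.

```python
import json


def build_slave_pod(job_id, master_url, secret,
                    image="example/jenkins-slave:latest"):
    """Return a Kubernetes v1 Pod manifest (as a dict) for a one-off slave.

    All names below (image, label, env vars) are hypothetical placeholders.
    """
    name = f"jenkins-slave-{job_id}"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"name": "jenkins-slave"}},
        "spec": {
            # The slave runs one job and is then destroyed, so the pod
            # should not be restarted once its container exits.
            "restartPolicy": "Never",
            "containers": [{
                "name": "slave",
                "image": image,
                # Parameters the slave needs to call back to the master
                # and register itself (variable names are assumed).
                "env": [
                    {"name": "JENKINS_URL", "value": master_url},
                    {"name": "JENKINS_SECRET", "value": secret},
                    {"name": "JENKINS_NAME", "value": name},
                ],
            }],
        },
    }


if __name__ == "__main__":
    pod = build_slave_pod("42", "http://jenkins.example:8080", "not-a-real-secret")
    print(json.dumps(pod, indent=2))
```

The point of the sketch is that everything the slave needs is injected when the pod is created, which is why no manual slave configuration is required on the Jenkins side.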
